Two years ago, OpenAI introduced ChatGPT, which changed communication and workflows across industries, from content generation to customer service. The rise of AI-generated text created a need to verify whether a given piece of content was written by AI, which led to the development of AI text detection tools. Those detectors in turn sparked the creation of Humanize AI technologies, setting the stage for a technological arms race.

I. The Development of AI Text Detection

Generative AI tools like ChatGPT have led to the creation of AI text detection tools to ensure text originality and prevent academic misconduct. These tools are vital for maintaining content authenticity in academia, media, and business sectors.

Key Detection Tools:

- GPTZero: Specializes in detecting AI-generated text by analyzing its complexity and structure. It is known for its high accuracy and user-friendly interface, though it can misjudge complex texts.

- Copyleaks: Excels in English contexts, with a reported detection accuracy of 98%. It uses semantic analysis to identify AI-generated text and provides detailed detection reports.

- Originality.ai: Detects both AI-generated text and plagiarism. It is favored by educational institutions and media companies for its comprehensive capabilities and user-friendly interface.

Overall, AI text detection tools have significantly improved in accuracy and functionality, becoming essential for content creators and reviewers.

II. The Rise of Humanize AI Technologies

As AI text detection tools evolve, Humanize AI technologies have emerged to bypass these measures. These tools transform AI-generated text to mimic human writing styles, aiming to evade detection.

Key Humanize AI Technologies:

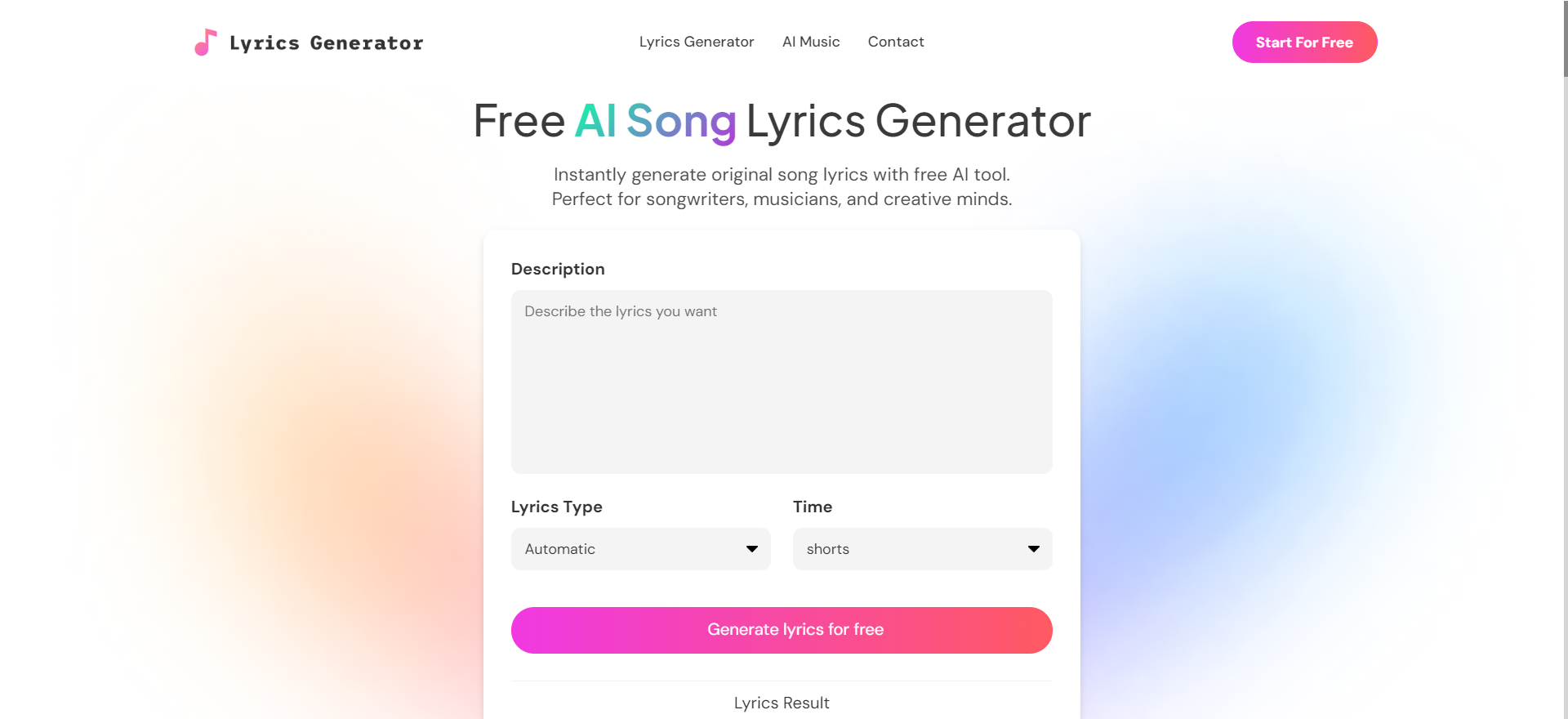

- Undetectable AI: Rewrites AI-generated text into human-like content, using advanced natural language processing to adjust word choice and sentence structure.

- Humanize AI Text: Converts AI-generated text into natural and fluent human language, suitable for various uses, including academic papers and business reports.

Market research indicates a wide range of AI humanizer products of varying quality. Even high-end tools such as Undetectable AI and AI Humanizer, which are recognized for their effectiveness, evade detection only 50-60% of the time, which shows how far these technologies remain from being reliable.

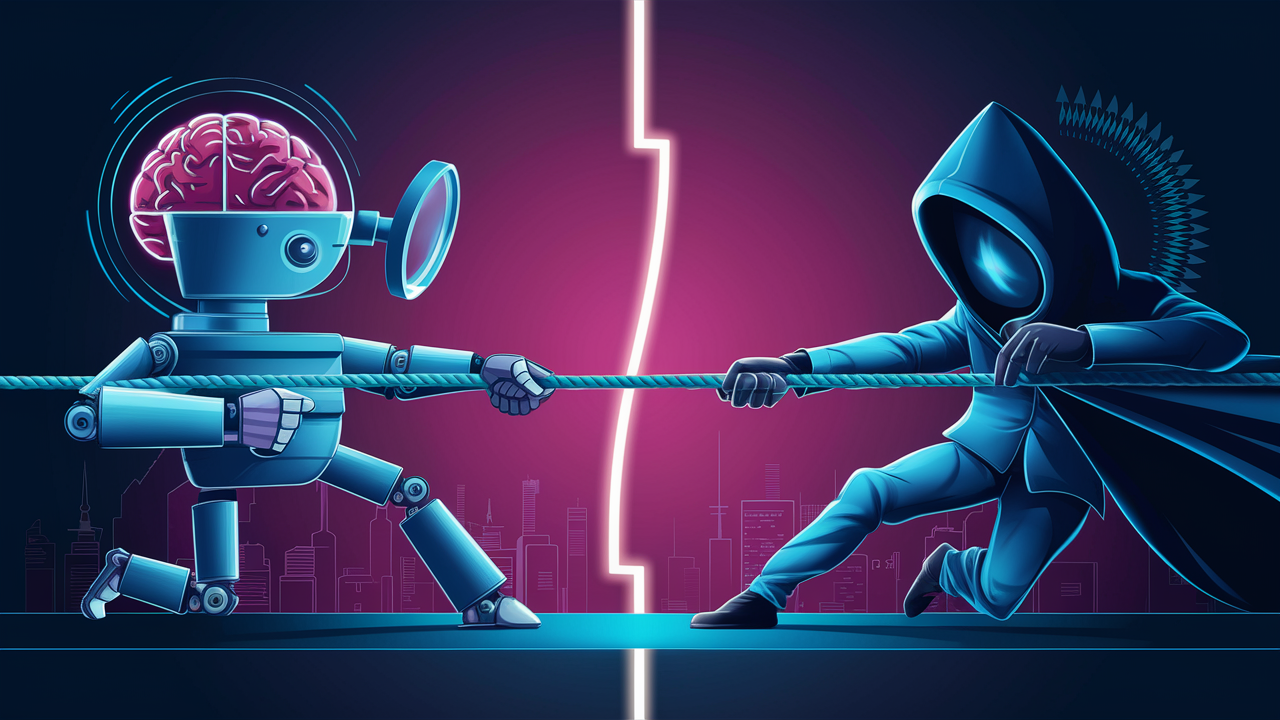

III. The Battle Between AI Text Detection and Humanize AI Technologies

The conflict between AI detection and Humanize AI technologies resembles a “technology arms race.” Detection tools refine their algorithms and expand their data to improve accuracy, while Humanize AI tools use increasingly advanced techniques to mimic human writing and avoid detection. This battle plays out in academia and business, where detection tools scrutinize text and Humanize AI tools attempt to evade that scrutiny.

Technically, AI detection tools analyze text complexity, syntax, and word frequency. For example, GPTZero detects AI text by examining perplexity, a measure of how predictable a text is to a language model; lower perplexity means more predictable text, which is treated as a sign of machine generation. In response, Humanize AI tools adjust these factors to make the text appear more human-like, employing techniques such as reordering sentences and modifying grammar.
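
To make the idea of perplexity concrete, here is a minimal sketch in Python. It is not how GPTZero or any other commercial detector works internally: it assumes a toy unigram (word-frequency) model with add-one smoothing in place of a real language model, and the names `tokenize` and `unigram_perplexity` are hypothetical helpers introduced only for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately crude tokenizer)."""
    return text.lower().split()


def unigram_perplexity(text, reference_text):
    """Perplexity of `text` under a unigram model fit on `reference_text`.

    Assumption for illustration: word probabilities come from raw word
    frequencies with add-one (Laplace) smoothing, so unseen words still
    receive a small nonzero probability.
    """
    ref_tokens = tokenize(reference_text)
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text must contain at least one token")

    counts = Counter(ref_tokens)
    total = len(ref_tokens)
    # One extra vocabulary slot stands in for all unseen words.
    vocab_size = len(counts) + 1

    log_prob_sum = 0.0
    for tok in tokens:
        prob = (counts[tok] + 1) / (total + vocab_size)
        log_prob_sum += math.log(prob)

    # Perplexity is exp of the average negative log-likelihood per token.
    return math.exp(-log_prob_sum / len(tokens))


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    predictable = unigram_perplexity("the cat sat", reference)
    surprising = unigram_perplexity("quantum zebra flux", reference)
    # Text made of words the model has seen scores lower than unfamiliar text.
    print(predictable < surprising)
```

In this sketch, text built from words the model expects gets a lower score than text full of unfamiliar words. A detector built on this principle would flag unusually low scores, which is why a humanizer that swaps in less predictable wording can raise the score and slip past.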

This ongoing tug-of-war drives the iteration and upgrade of both detection and Humanize AI tools. Despite these advances, Humanize AI tools often produce text with grammatical or logical errors, which lowers its quality. How this cycle will end remains uncertain, but it is likely to keep pushing AI and language processing technologies to improve.

As AI continues to shape content creation, it is crucial to think critically and choose tools wisely. This article aims to provide insight into the current dynamics of AI text detection and Humanize AI technologies.